In today’s competitive digital landscape, it’s not enough to simply publish content and hope search engines discover it. Even a well-optimized website can suffer from hidden crawl and indexation issues that keep pages from ranking. These problems often go unnoticed in the background, yet they can significantly reduce organic traffic. Tools like SEOsets give marketers and website owners actionable insights to uncover and resolve these issues, so that every page has the best chance to perform in search results.

Understanding Crawl and Indexation Issues

Before diving into detection and fixes, it’s crucial to understand what crawl and indexation issues are and why they matter.

- Crawl Issues occur when search engine bots cannot reach or fetch your pages reliably. Common causes include broken links, incorrect redirects, server errors, and robots.txt rules that disallow important pages.

- Indexation Issues arise when pages that bots can crawl are still left out of search engine indexes. Common causes include duplicate content, thin content, noindex tags, and canonical errors.

Together, crawl and indexation problems can keep valuable content out of search results, reducing visibility, traffic, and conversions.
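
The split between the two categories can be expressed as a simple triage rule. The sketch below is illustrative only and is not how SEOsets works internally: `PageSignals` and `triage` are hypothetical names, and it assumes you have already collected each URL's final HTTP status (after redirects), whether robots.txt blocks it, any noindex directive, and its declared canonical URL.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageSignals:
    """Hypothetical per-URL signals collected by an audit."""
    url: str
    status: int               # final HTTP status code after redirects
    robots_blocked: bool      # True if robots.txt disallows the URL
    noindex: bool             # True if a noindex directive was found
    canonical: Optional[str]  # declared canonical URL, if any


def triage(page: PageSignals) -> str:
    """Label a page as a crawl issue, an indexation issue, or ok."""
    # Crawl issues: the bot cannot fetch the page's content at all.
    if page.robots_blocked:
        return "crawl: blocked by robots.txt"
    if page.status >= 500:
        return "crawl: server error"
    if page.status >= 400:
        return "crawl: client error (broken link target)"
    # Indexation issues: the page is fetchable but may be left out of the index.
    if page.noindex:
        return "index: noindex directive"
    if page.canonical and page.canonical != page.url:
        return "index: canonicalized to another URL"
    return "ok"
```

The order of the checks matters: a search engine cannot read a noindex tag or canonical link on a page it is not allowed to crawl, so crawl problems are checked first.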

How SEOsets Helps Identify Hidden Issues

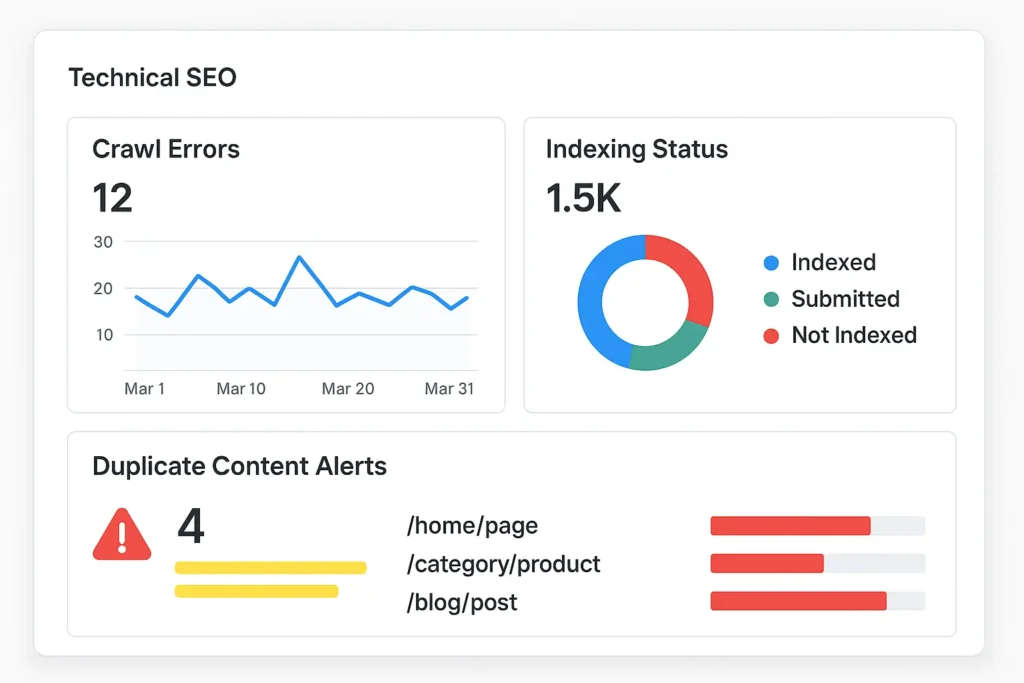

Manually auditing crawl and indexation can be time-consuming and error-prone. SEOsets automates this process and offers precise, actionable insights to detect issues that are often overlooked:

- Crawlability Reports – SEOsets analyzes your site structure and highlights areas where search engine bots may face difficulties. This includes broken internal links, orphan pages, and pages blocked by robots.txt.

- Indexation Analysis – The platform identifies pages that should be indexed but are not, highlighting potential noindex tags, canonical conflicts, or content quality concerns.

- Duplicate Content Detection – Duplicate or near-duplicate content can confuse search engines and hinder indexing. SEOsets flags these issues, helping you consolidate pages and improve indexing efficiency.

- Server and URL Errors – SEOsets monitors server response codes, redirects, and URL errors. Links to pages returning 404 errors waste crawl requests, and frequent 5xx errors such as 500 can cause search engines to slow their crawling, so resolving these issues improves overall SEO health.

By leveraging these insights, webmasters can proactively address issues before they escalate, ensuring their content is fully crawlable and indexable.

Common Crawl and Indexation Problems and How to Fix Them

1. Broken Links and Orphan Pages

Broken links create dead ends for search engines, while orphan pages (pages with no internal links pointing to them) are often missed during crawling. SEOsets identifies both, so you can plan how to fix broken links and link orphan pages into your site structure.

Fix: Update or remove broken links, and integrate orphan pages into your site’s navigation to improve discoverability.
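
Both problems can be found from an internal link graph. This is a minimal sketch, assuming you already have a set of known URLs (for example from your sitemap or analytics), a map from each crawled page to the internal URLs it links to, and the status code each link target returned. `find_orphans` and `find_broken_links` are hypothetical helpers, not part of SEOsets, and the orphan check uses the definition above (no inbound internal links).

```python
def find_orphans(known: set[str], links: dict[str, set[str]], home: str) -> set[str]:
    """Known pages that no other page links to; the homepage is exempt."""
    # Collect every internal link target, ignoring self-links.
    linked = {dst for src, dsts in links.items() for dst in dsts if dst != src}
    return known - linked - {home}


def find_broken_links(links: dict[str, set[str]], status: dict[str, int]) -> list[tuple[str, str]]:
    """(source, target) pairs whose target returned a 4xx or 5xx status."""
    # Targets missing from `status` (not yet checked) are skipped.
    return sorted(
        (src, dst)
        for src, dsts in links.items()
        for dst in dsts
        if status.get(dst, 0) >= 400
    )
```

For example, if `/old-landing` appears in your sitemap but no crawled page links to it, `find_orphans` reports it, and a link from `/blog/a` to a target that returned 404 shows up as `("/blog/a", target)` in `find_broken_links`.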

2. Noindex and Canonical Conflicts

Sometimes, pages meant to rank are mistakenly tagged with noindex or conflicting canonical URLs, preventing indexing. SEOsets highlights these inconsistencies.

Fix: Review robots meta tags and canonical links. Make sure only pages you deliberately want kept out of search, such as duplicate or low-value pages, carry noindex, and that canonical tags on similar pages point to the preferred URL.
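
A page-level check for these conflicts might look like the following sketch, which uses Python's standard `html.parser` module. It assumes you have the page's HTML and know the URL you want to rank; `check_indexability` is a hypothetical helper. It only reads `<meta name="robots">` (or `googlebot`) and `<link rel="canonical">`, so a noindex sent through an `X-Robots-Tag` HTTP header would need a separate check, and ignoring trailing slashes is a simplification of real URL normalization.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class IndexSignalParser(HTMLParser):
    """Collects robots meta directives and the rel="canonical" href from HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots: list[str] = []
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.robots += [d.strip().lower() for d in a.get("content", "").split(",")]
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None


def check_indexability(url: str, html: str) -> list[str]:
    """Warnings for a page that is meant to rank at `url`."""
    parser = IndexSignalParser()
    parser.feed(html)
    parser.close()
    problems = []
    # "none" is shorthand for "noindex, nofollow".
    if "noindex" in parser.robots or "none" in parser.robots:
        problems.append("noindex directive")
    if parser.canonical:
        # Resolve relative hrefs; treat a trailing slash as insignificant (a simplification).
        target = urljoin(url, parser.canonical)
        if target.rstrip("/") != url.rstrip("/"):
            problems.append(f"canonical points elsewhere: {target}")
    return problems
```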

3. Slow or Erratic Server Responses

Search engines may reduce crawl frequency for websites with slow load times or frequent server errors. SEOsets tracks server responses and alerts you to any critical issues.

Fix: Optimize server performance, implement caching, and resolve error codes promptly.
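
To spot-check erroring or slow URLs yourself, a rough sketch with the standard library could look like this. The function names and the 1-second "slow" threshold are assumptions for illustration. A HEAD request keeps the check light, but some servers answer HEAD differently from GET, and network failures such as timeouts raise `urllib.error.URLError` rather than returning a status code.

```python
import time
import urllib.error
import urllib.request


def check_url(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Send a HEAD request; return (final status code, seconds elapsed)."""
    request = urllib.request.Request(url, method="HEAD")
    start = time.monotonic()
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            code = response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; the code is still useful.
        code = err.code
    return code, time.monotonic() - start


def classify_status(code: int, elapsed_s: float, slow_threshold_s: float = 1.0) -> str:
    """Bucket a response; the 1-second 'slow' threshold is an assumption."""
    if code >= 500:
        return "server error"
    if code >= 400:
        return "client error"
    if elapsed_s > slow_threshold_s:
        return "slow"
    return "ok"
```

For example, `classify_status(*check_url("https://example.com/"))` returns one of the four labels. Because `urlopen` follows redirects, the reported code is the status of the final URL.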

4. Duplicate and Thin Content

Duplicate content splits ranking signals across several URLs, while thin content offers little value to users. Search engines may choose not to index pages with either problem.

Fix: Consolidate duplicate pages using 301 redirects or canonical tags, and enhance thin content with relevant, high-quality information.
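
One common way to flag near-duplicates is to compare overlapping word sequences (shingles) using Jaccard similarity. This is a simple sketch of that general technique, not necessarily what SEOsets uses. The 5-word shingle size and 0.8 threshold are assumptions to tune, and comparing every pair of pages is quadratic, so large sites usually switch to an approximation such as MinHash.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences from the lowercased text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, k: int = 5) -> list[tuple[str, str, float]]:
    """Pairs of URLs whose shingle sets are at least `threshold` similar."""
    sigs = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for u, v in combinations(sorted(sigs), 2):  # O(n^2) pairwise comparison
        score = jaccard(sigs[u], sigs[v])
        if score >= threshold:
            pairs.append((u, v, round(score, 2)))
    return pairs
```

For instance, two product pages whose 14-word descriptions differ only in the last word share 9 of 11 distinct 5-word shingles, a similarity of about 0.82, so they would be flagged as candidates for a canonical tag or a 301 redirect.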

5. Sitemap and Robots.txt Issues

A misconfigured robots.txt file can block important pages from being crawled, and an outdated sitemap can omit them or list URLs that no longer exist. SEOsets audits both files to ensure they align with your SEO goals.

Fix: Update sitemaps with all critical URLs, and review robots.txt to remove unnecessary restrictions.
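
A quick consistency check between the two files is to confirm that nothing listed in the sitemap is disallowed by robots.txt. This sketch uses Python's standard `urllib.robotparser` and `xml.etree.ElementTree`; `blocked_sitemap_urls` is a hypothetical helper, and it assumes a plain `<urlset>` sitemap rather than a sitemap index. Note that `robotparser` applies simple prefix rules in file order, so for files that rely on `*` or `$` wildcards or on longest-match precedence, its answers may differ from Googlebot's.

```python
import urllib.robotparser
import xml.etree.ElementTree as ET

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """All <loc> values from a <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text]


def blocked_sitemap_urls(robots_txt: str, sitemap_xml: str, agent: str = "Googlebot") -> list[str]:
    """Sitemap URLs that robots.txt disallows for `agent`."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text you already have; no network access
    return [url for url in sitemap_urls(sitemap_xml) if not parser.can_fetch(agent, url)]
```

Any URL this returns is sending search engines mixed signals: the sitemap asks for it to be crawled while robots.txt forbids it, so one of the two files needs updating.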

The Role of Continuous Monitoring

Crawl and indexation issues are rarely static. Websites are dynamic, with content updates, plugin changes, and technical adjustments that can introduce new problems. Continuous monitoring is key:

- Regular SEO Audits: Use SEOsets to perform routine checks, identifying new crawl errors or indexation gaps.

- Automated Alerts: Enable alerts for server errors, broken links, and indexing issues to act quickly.

- Performance Reporting: Track the impact of fixes on organic traffic and indexing to validate your SEO strategy.

Consistent monitoring ensures your website remains healthy, accessible, and optimized for search engines over time.
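
Alerts are most useful when they fire on what changed since the last audit rather than on every known issue. A minimal sketch, assuming each audit run can be exported as a map from URL to a set of issue labels; the labels and function names here are hypothetical.

```python
Audit = dict[str, set[str]]  # URL -> set of issue labels from one audit run


def new_issues(previous: Audit, current: Audit) -> Audit:
    """Issues present in `current` that were absent from `previous`, per URL."""
    alerts: Audit = {}
    for url, issues in current.items():
        added = issues - previous.get(url, set())
        if added:
            alerts[url] = added
    return alerts


def resolved_issues(previous: Audit, current: Audit) -> Audit:
    """Issues that disappeared between the two runs (the same diff, reversed)."""
    return new_issues(current, previous)
```

Alerting on `new_issues` catches regressions quickly, while `resolved_issues` gives a running record of which fixes took effect, which feeds directly into performance reporting.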

Implementing Fixes Strategically

While detection is critical, implementing solutions effectively is equally important. Here’s a strategic approach:

- Prioritize High-Impact Pages: Focus on pages that drive traffic or conversions first.

- Document Changes: Keep a record of fixes to track improvements and prevent recurring issues.

- Test Before Deployment: Use staging environments to test changes, minimizing errors on live pages.

- Measure Impact: Monitor indexing improvements, crawl rates, and traffic to gauge success.

SEOsets makes this process simpler by providing actionable recommendations and integrating easily into your workflow.
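
The "prioritize high-impact pages" step can be made concrete with a simple score. This sketch assumes you can join audit issues with monthly sessions from your analytics; the severity weights, the `Issue` fields, and the scoring rule are illustrative assumptions, and you could substitute conversions for sessions.

```python
from dataclasses import dataclass

# Hypothetical severity weights; tune them to your own site.
SEVERITY = {"server error": 3, "noindex": 3, "broken link": 2, "thin content": 1}


@dataclass
class Issue:
    url: str
    kind: str              # issue label, e.g. "noindex"
    monthly_sessions: int  # traffic to the affected page, e.g. from analytics


def impact(issue: Issue) -> int:
    """Severity weight (default 1 for unknown kinds) times page traffic."""
    return SEVERITY.get(issue.kind, 1) * issue.monthly_sessions


def prioritize(issues: list[Issue]) -> list[Issue]:
    """Highest-impact issues first; ties broken alphabetically by URL."""
    return sorted(issues, key=lambda i: (-impact(i), i.url))
```

With these weights, a noindex tag on a pricing page with 1,200 monthly sessions (score 3,600) would be fixed before thin content on a page with 900 sessions (score 900).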

Why SEOsets is the Go-To Tool

While there are several SEO tools available, SEOsets offers a unique combination of depth, accuracy, and user-friendliness. Its comprehensive audit reports uncover issues often overlooked by traditional tools. By integrating technical SEO, content analysis, and indexing insights in one platform, it empowers website owners and digital marketers to maintain optimal site health without constant manual checks.

Ready to optimize your website for full visibility? Explore SEOsets and start detecting hidden crawl and indexation issues today.

FAQs

Q1: How often should I check for crawl and indexation issues?

Ideally, run an audit monthly or after significant website changes. Continuous monitoring helps catch issues before they impact SEO performance.

Q2: Can crawl issues affect my rankings even if content is high quality?

Yes. Even the best content cannot rank if search engines cannot access or index it efficiently.

Q3: Are duplicate content issues always harmful?

Not always. Some duplication, like product descriptions across e-commerce sites, is common. However, excessive duplication can confuse search engines and dilute ranking signals.

Q4: How long does it take for fixes to reflect in search results?

Improvements can appear within a few days to a few weeks, depending on crawl frequency and page authority.

Q5: Do I need technical knowledge to use SEOsets?

Basic SEO knowledge helps, but SEOsets is designed to provide clear, actionable insights that can be implemented with minimal technical expertise.